A faster computer alone is not enough to deliver the performance that big data requires. You must be able to distribute the components of a big data service across a series of nodes. In distributed computing, a node is an element contained within a cluster of systems or within a rack.

A node usually includes a CPU, memory, and some type of disk. However, a node can also be a blade with CPU and memory that relies on nearby storage within the same rack.

In a big data environment, these nodes are usually clustered together to provide scale. For example, you might start out with a big data analysis and keep adding more data sources. To accommodate that growth, an organization simply adds more nodes to the cluster so that it can scale out to meet the growing requirements.

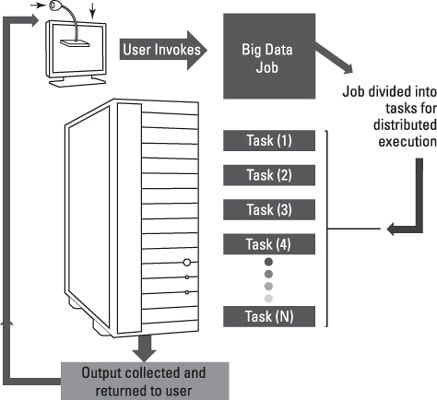

However, it is not enough to simply expand the number of nodes in the cluster. It is also important to be able to send parts of the big data analysis to different physical environments. Where you send these tasks and how you manage them makes the difference between success and failure.

In some complex situations, you may want to run many different algorithms in parallel, even within the same cluster, to achieve the required analysis speed. Why would different big data algorithms be executed in parallel within the same rack? The closer together the distributed functions are, the faster they can execute.
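The idea of running several different algorithms side by side can be sketched on a single machine. The example below is only an illustration under stated assumptions: the three analysis functions (`average`, `value_range`, `count_above`) and the helper `run_in_parallel` are hypothetical names, and a thread pool stands in for the separate nodes of a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


# Three hypothetical, independent analyses over the same data set.
def average(values):
    return mean(values)


def value_range(values):
    return max(values) - min(values)


def count_above(values, threshold=50):
    return sum(1 for v in values if v > threshold)


def run_in_parallel(values):
    """Submit every analysis at once and collect the results by name."""
    analyses = {
        "average": average,
        "range": value_range,
        "count_above_50": count_above,
    }
    # One worker per analysis; in a cluster each worker would be a node.
    with ThreadPoolExecutor(max_workers=len(analyses)) as pool:
        futures = {name: pool.submit(fn, values) for name, fn in analyses.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(run_in_parallel([10, 60, 80, 30]))
```

In a real deployment the workers would be processes on different nodes, and the cost of moving data between them is what makes proximity matter.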

Although it is possible to distribute big data analysis across networks to take advantage of available capacity, you should base this kind of distribution on performance requirements. In some cases, processing speed takes a back seat. In other cases, getting results quickly is the requirement, and then you want to make sure that the networking functions are in close proximity to each other.

In general, the big data environment should be optimized for the type of analytics task. Scalability is therefore the linchpin of making big data work successfully. Although it would theoretically be possible to run a big data environment within a single large system, it is not practical.

To understand the scalability needs of big data, one only has to look at cloud scalability and understand both the requirements and the approach. Like cloud computing, big data requires fast networks and inexpensive clusters of hardware that can be combined in racks to increase performance. These clusters are supported by software automation that enables dynamic scaling and load balancing.
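To make load balancing and scaling concrete, here is a minimal sketch of one simple strategy: give each task to the node that currently has the least work. This is an illustrative greedy heuristic, not the method of any particular product. The function names, task names, and cost figures are all hypothetical.

```python
import heapq


def assign_tasks(task_costs, node_names):
    """Greedy balancing: the largest tasks go first, each to the least-loaded node."""
    heap = [(0, name) for name in node_names]  # (current load, node name)
    heapq.heapify(heap)
    assignment = {name: [] for name in node_names}
    ordered = sorted(task_costs.items(), key=lambda kv: kv[1], reverse=True)
    for task, cost in ordered:
        load, name = heapq.heappop(heap)  # node with the smallest load so far
        assignment[name].append(task)
        heapq.heappush(heap, (load + cost, name))
    return assignment


def node_loads(assignment, task_costs):
    """Total cost of the tasks placed on each node."""
    return {
        name: sum(task_costs[task] for task in tasks)
        for name, tasks in assignment.items()
    }


if __name__ == "__main__":
    costs = {"a": 5, "b": 3, "c": 3, "d": 1}  # hypothetical task costs
    two_nodes = assign_tasks(costs, ["n1", "n2"])
    three_nodes = assign_tasks(costs, ["n1", "n2", "n3"])
    print(node_loads(two_nodes, costs))
    print(node_loads(three_nodes, costs))
```

Running the same workload with a third node lowers the heaviest load on any single node, which is the effect that adding nodes to a cluster is meant to achieve.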

The design and applications of MapReduce are excellent examples of how distributed computing makes big data operationally viable and affordable. In essence, companies are at one of those unique tipping points in computing where technology concepts come together at the right time to solve the right problems. The combination of distributed computing, hardware-optimized systems, and practical solutions like MapReduce and Hadoop is transforming data management in profound ways.
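The MapReduce pattern itself can be illustrated with a small word count written in plain Python. This is a single-process sketch of the map, shuffle, and reduce phases, not the Hadoop API. The function names are chosen for illustration, and each input string stands in for a chunk of data that a real cluster would process on a separate node.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single result."""
    return key, sum(values)


def word_count(chunks):
    # In a cluster, each chunk would be mapped on a different node.
    mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data big", "data nodes"]))
```

Because each call to `map_phase` depends only on its own chunk, and each call to `reduce_phase` depends only on its own key, both phases can be spread across many nodes without coordination beyond the shuffle step.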